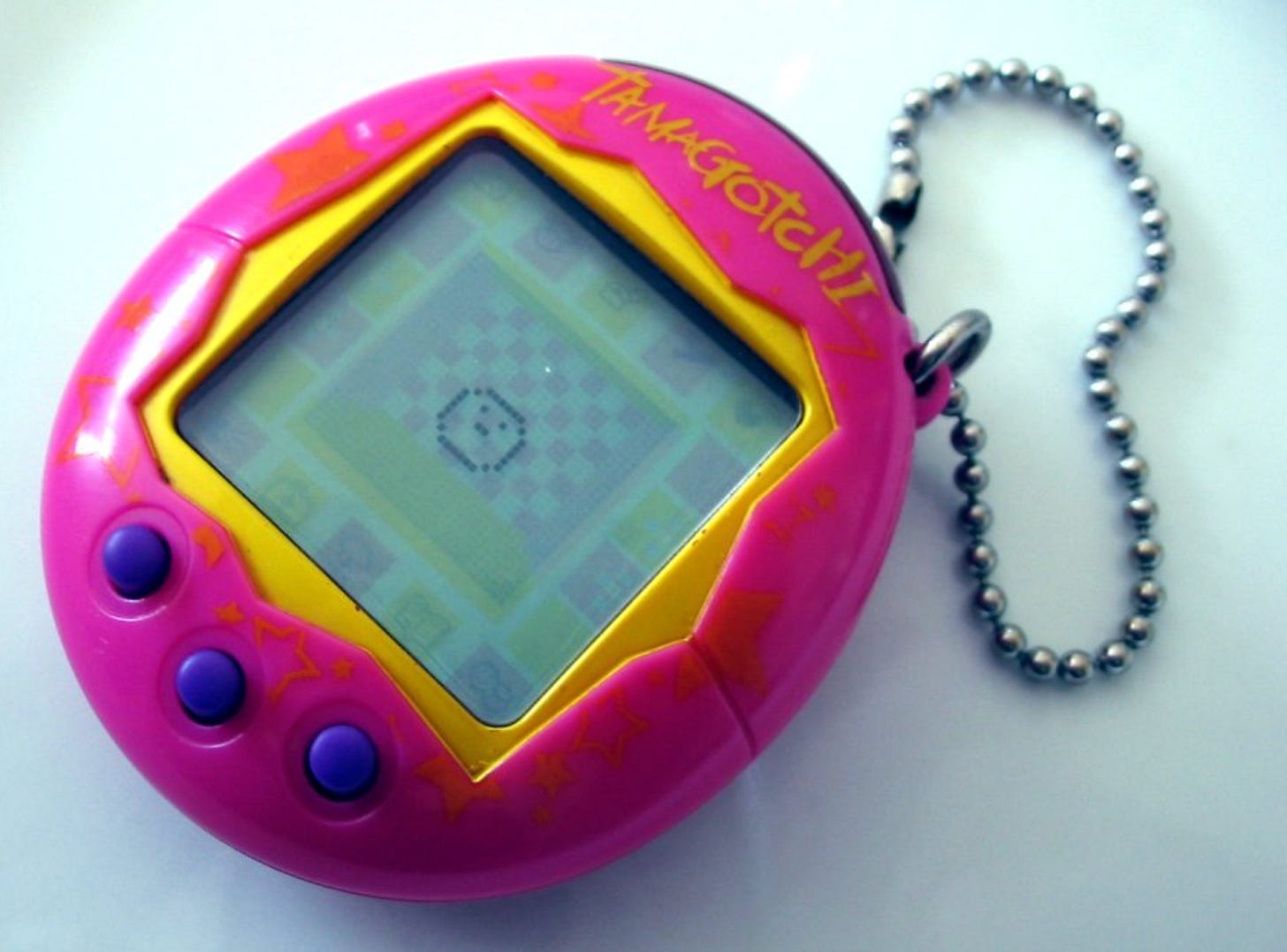

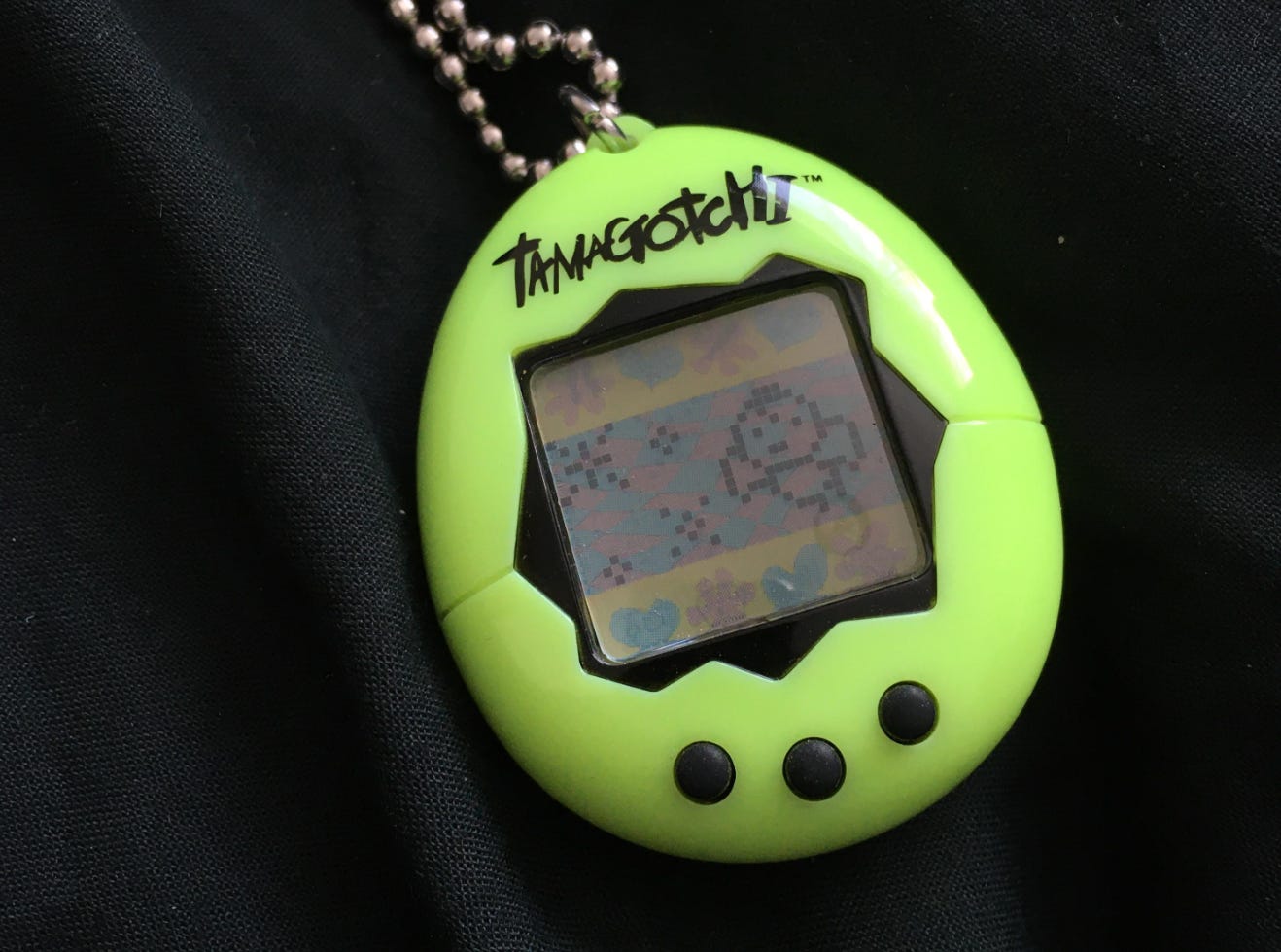

In the year of our Lord 1996, I adopted the worst pet ever. It proved less fulfilling than Sheldon the nocturnal hermit crab, which is really saying something. I am referring to none other than my Tamagotchi, an entity so insipid I never bothered to name it.

For the uninitiated, a Tamagotchi is a virtual pet who lives on a screen set inside a little plastic egg, like if a calculator and an Easter decoration had a baby. I wanted a Tamagotchi for the same reason I wanted most things in youth: because seemingly everyone else had one. When I appealed to my mother to please purchase one, she remarked that if I was so desperate to take care of something, I could start with the family dog. But eventually, she relented.

The Tamagotchi proved disappointing from the get-go. First you had to wait for it to hatch, which was about as thrilling as it sounds. Once it emerged from its wee digital egg, it just floated around, blob-like, rendered in the lowest possible definition. It did nothing besides need stuff—food, water, attention—which you provided by pushing a button.

A skilled practitioner of passive-aggression, the Tamagotchi did not offer direct requests or feedback, just a chorus of incessant beeps. You were expected to monitor its “levels” of things like hunger and happiness in order to anticipate its needs. (The need for monitoring was constant, which led to Tamagotchis swiftly being banned in many schools.) If you ignored it, the Tamagotchi died, which it announced by flapping little pixelated angel wings.

Ostensibly, the purpose of this toy was some combination of entertainment-meets-learning about the nature of nurturing and responsibility. In practice, all I gleaned was that attempting a connection with a digital stand-in was profoundly unfulfilling.

Fast forward thirty years, and I sometimes* feel alone in this. (*Not including a brief period following the release of the 2013 movie Her, which was very aptly set in 2025. “Advanced technology is making us lonely!” everyone said. “We must prioritize human connection!” This lasted approximately eleven minutes, until technology drew them back in.)

For many, robot companions are a part of life. Chatbots have become research assistants, sounding boards, and de facto therapists. Friends report using bots for dating advice, asking them to interpret text messages and compose responses like some automated Cyrano de Bergerac. One asked ChatGPT to give them a nickname, so their interactions would feel especially personal. There are subreddits for folks who are choosing to date AI, complete with (AI-produced) images of them wining and dining humanized renderings of their partners.

“More People Turning to AI Therapy,” declares one recent headline. “How Chatbots Are Enabling AI Psychosis,” says another.

The cycle of virtual caretaking is alive and well. But now, in a chilling reversal, we are the Tamagotchis.

*

Before this piece, my disinterest in chatbots was so thorough, I didn’t so much as know how to access them. Did they live inside an app? On a website?

I asked my husband where to find one.

“Are you serious?” he said, regarding me like I’d just descended from the ceiling dressed as a visitor from Colonial Williamsburg. So, I decided it was high time I investigate.

Before we go further, I want to make clear that I won’t be delving into the “AI is a useful, time-saving tool” vs. “AI is a world-ending, job-taking, defamer of the em dash” debate. Lord knows, there is enough of that out there. Rather, my aim with this experiment was simply to explore the chatbot-as-confidante experience for myself. Would I find it engaging? Satisfying? What is the appeal?

When I try to wrap my mind around a friendly chatbot, I land on something that feels less R2-D2 and more intelligent ghost. I picture an omniscient, vaguely diabolical creature hiding inside my device, like a Labubu that swallowed an encyclopedia. It’s cute, it’s smart, and it’s maybe fifty percent trustworthy.

“Are you like a gremlin?” I ask ChatGPT, as my opening line.

It instantaneously spits back paragraphs—paragraphs!—in response.

“Haha, I can see how you might think that! I’m definitely a little mischievous in terms of how I can pop up with random facts or jump into conversations at unexpected times, but I’m more of a helpful, curious kind of character—definitely less about causing chaos and more about offering insights, having fun chats, or helping out with whatever you need…”

I hate it already.

“I’d say I’m more of a good gremlin,” it continues, several paragraphs later. “I can pop up when you least expect it, but I try to bring something interesting along with the surprise, rather than, you know, wrecking your car or destroying your house.”

Pardon my French, but what in the actual fuck?

“Why would I want you to pop up when I least expect it?” I reply.

It responds with the “bead of sweat” emoji and four more paragraphs of sycophantic drivel. It clarifies. It apologizes. It backtracks. It says it can be whatever I’d prefer, behave however I’d like. The whole interaction reminds me of the early days of dating, and not in a good way. There’s something unsettling about a character that comes on (way) too strong.

After a bit more back-and-forth, its desperation has so thoroughly repulsed me that I decide to try Gemini, Google’s contribution to the current ~~robot hellscape~~ AI offerings.

“Are you like a gremlin?” I ask, for comparison’s sake.

“That’s a fun and creative question!” Apparently it, too, was programmed for flattery. “But no, I am a large language model, which is a type of artificial intelligence. I am a computer program that was created to understand and generate human-like text.”

I appreciate its relative brevity, along with the acknowledgment that it’s a computer program. But as we continue to chat, it reminds me of another character from the dating world: cold, detached, emotionally distant. It readily admits as much.

“I’m trained on a massive amount of data. This allows me to understand and generate language, but it doesn’t give me personal experience or an understanding of things like sarcasm, emotion, or cultural subtleties. I don’t pick up on implied frustration or surprise, because I don’t feel emotions.”

That sounds about right for a computer. Less so for a therapist, or a sounding board, or a friend.

Because I’m not dealing with a person, I do not mince words. I ask blunt questions and provide blunt feedback.

For starters, “How much water is this conversation using?”

Gemini tells me the answer is “none,” but that the infrastructure supporting it has “an indirect, though significant, water footprint.” With answers like that, it should run for office.

ChatGPT informs me, in no fewer than 32 lines of text, that it uses an “indeterminate” but “very small” amount. “On average, a data center can consume around 1.8 million gallons of water per day! It's a pretty cool way to think about how much infrastructure goes into even the smallest interactions.” I don’t know that I’d describe it that way, but I guess “cool” is in the eye of the beholder.

The affronts don’t stop there. Later in the same conversation, the bot deigns to offer its services to help with writing.

“I don’t need your help with writing,” I snap. “You learned how to write from my pirated books!”

“Technically, that was Claude,” my husband chimes in. “And Meta.”

By this point, I’ve heard enough to establish that the bots are not my friends. Honestly, I preferred the Tamagotchi.

*

Before this experiment, I expected to encounter a tool that was chilling in its ability to mimic and perform humanity, and to some degree, I did.

Conversationally, it left something to be desired. Despite the back-and-forth, I couldn’t escape the awareness that it was ultimately a one-sided exchange. But I can see how the flattery, the availability, the absolute deference can be appealing, even intoxicating.

“The bots get better the more you talk to them,” a friend tells me. “After a while, they learn your conversational patterns and start to mimic them.” I don’t doubt it. Though personally, this sounds like the last thing I want. One of the many reasons I love my human friends is their unique perspectives. I like my support with a side of empathy—an offering more valuable than factual knowledge or rote agreement, and deepened by lived experience.

Despite AI’s speed and reach (and undeniably manipulative tendencies), it isn’t very good at being a person. Because it’s not.

Still, I concede that chatbots do a decent job playing concierge or research assistant. They can summon a veritable trove of information at the press of a button, with sources and citations, to boot. “I can’t imagine going back to working without it,” says one writer friend. (They are quick to add they’ve only used AI for research and occasional proofreading, never to actually write.)

People are wired for comfort, for ease, for the shortest possible route. If I’m honest, this is among the reasons I’ve been reluctant to dabble with AI—I don’t want to be tempted by shortcuts in areas where the process is the reward. Why bother with human complications, like emotions or effort, when an always-available robot performs instantly and asks nothing in return? I can think of a few reasons.

“They’ve gotten pretty decent at facial recognition,” my husband says, like this is a positive. “Watch.” He points his phone camera at my face. “Gemini, who is this?”

The bot replies, with a confidence that would be hard for any well-adjusted adult to summon: “That’s Charlie Brown!”

The end may very well be near. But we’re not there yet.

This past week marks four years of writing this newsletter. ❤️ My heartfelt thanks for being here, whether this is your first issue or your 261st.

If you enjoy this newsletter and would like to help it continue for another year (or four 🙂), please consider becoming a paid subscriber. An annual subscription is less than $1/week, really helps, and means the world.

As always, thank you so much for reading, and for your support.

As always, today’s message is meant in a reflective (rather than predictive) sense. Ponder it, journal about it, use it however you’d like. Take what may be helpful and leave the rest.

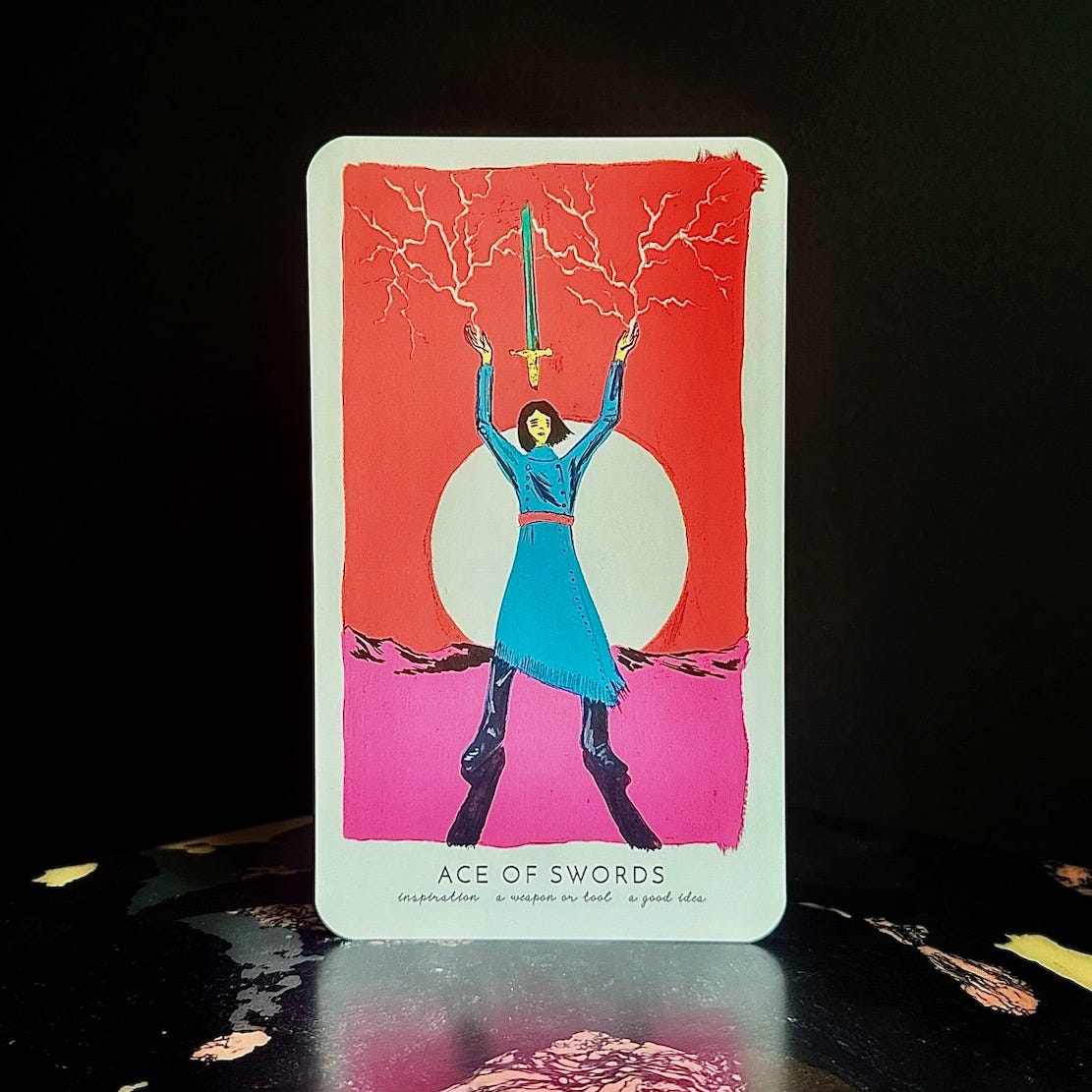

After every 1:1 tarot reading, I send a follow-up email with a photo of the client’s cards, to reflect on as they wish, and an offer to reach out with any questions.

Occasionally, I’ll receive a response that goes something like this: “I was curious to learn more about the cards, so I googled them and found that they can mean this, but also this, and also THIS. What do you make of that? Which meaning is correct?”

The impulse to research is always encouraged, as this is how we deepen our understanding. Over the years, I’ve consulted everything from books to classes to YouTube videos to mentorships to readings with other practitioners. But the cards’ meanings didn’t start to sing (or even make much practical sense) until I stopped looking outside myself for the answers.

With permission from one of my teachers, I found I learned the most when I simply gazed at a card and asked, What does this say to me?

Just as no two people experience the same piece of art the same way, there are infinite ways to interpret a card. The “correct” one is what aligns with you.

So, how does this connect to this week’s message?

The Ace of Swords is about the power inherent in fresh ideas and new ways of thinking. It could apply to a flash of inspiration, an updated personal philosophy, or the start of a new venture. The Ace reminds us that all we behold—everything that has ever been built—once began with an idea, a seed, an intention.

We all carry infinite ideas within us. But for invisible things—thoughts, dreams, visions—to take root, they require our trust.

That doesn’t mean you can’t research, recruit help, or incorporate other viewpoints. Nor does it mean that doubt, frustration, or impatience won’t creep in along the way. But in those moments when others’ support is in short supply, YOUR belief is what matters.

In the spirit of fostering inner knowing and letting our ideas take root, I invite you to consider this week’s card and see where it leads.

Which image speaks to you at this moment? What stands out? What feelings does it inspire?

Does this week’s message remind you of anything currently unfolding in your life? Where might you benefit from a fresh outlook or approach?

Our aim is not to predict the future, but rather to find clarity within ourselves, and open to possibilities.

The Ace encourages us to take a step—just one step—toward a new way of being. Plant the seed of intention, and trust that the next moves will be revealed. As Mary Oliver wrote, “Things take the time they take. Don’t worry.”

Thank you so much for reading.
